Wacky Times for AI

Been a wacky month or two for AI news! OpenAI is reorganizing! Apple declined to invest! Whatsisname decided he wanted equity after all! The Governor of CA vetoed an AI safety bill! Microsoft is rebooting Three Mile Island, which feels like a particularly lazy piece of satire from the late 90s that escaped into reality! Study after study keeps showing no measurable benefit to AI deployment! The web is drowning in AI slop that no one likes!

I don’t know what any of that means, but it’s starting to feel like we’re getting real close to the part of The Sting where Kid Twist tells Quint from Jaws something confusing on purpose.

But, in our quest here at Icecano to bring you the best sentences from around the web, I’d like to point you at The Subprime AI Crisis because it includes this truly excellent sentence:

Generative AI must seem kind of magical when your entire life is either being in a meeting or reading an email

Oh Snap!

Elsewhere in that piece it covers the absolutely eye-watering amounts of money being spent on the plagiarism machine. There are bigger problems than the cost; the slop itself, the degraded information environment, the toll on the actual environment. But man, that is a hell of a lot of money to just set on fire to get a bunch of bad pictures no one likes. The opportunity cost is hard to fathom; imagine what that money could have been spent on! Imagine how many actually cool startups that would have launched! Imagine how much real art that would have bought!

But that’s actually not what I’m interested in today, what I am interested in are statements like these:

State of Play: Kobold Press Issues the No AI Pledge

Legendary Mario creator on AI: Nintendo is “going the opposite direction”

I stand by my metaphor that AI is like asbestos, but increasingly it’s also the digital equivalent of High Fructose Corn Syrup. Everyone has accepted that AI stuff is “not as good”, and it’s increasingly treated as low-quality filler, even by the people who are pushing it.

What’s intriguing to me is that companies whose reputation or brand centers around creativity or uniqueness are working hard to openly distance themselves. There’s a real “organic farm” energy, or maybe more of a “restaurant with real food, not fast food.”

Beyond the moral & ethical angle, it gives me hope that “NO AI” is emerging as a viable advertising strategy, in a way that “Made with AI” absolutely isn’t.

Ableist, huh?

Well! Hell of a week to decide I’m done writing about AI for a while!

For everyone playing along at home, NaNoWriMo, the nonprofit that grew up around the National Novel Writing Month challenge, has published a new policy on the use of AI, which includes this absolute jaw-dropper:

We also want to be clear in our belief that the categorical condemnation of Artificial Intelligence has classist and ableist undertones, and that questions around the use of Al tie to questions around privilege.

Really? Lack of access to AI is the only reason “the poors” haven’t been able to write books? This is the thing that’s going to improve access for the disabled? It’s so blatantly “we got a payoff, and we’re using lefty language to deflect criticism,” so disingenuous, and in such bad faith, that the only appropriate reaction is “hahahha Fuck You.”

That said, my absolute favorite response was El Sandifer on Bluesky:

"Fucking dare anyone to tell Alan Moore, to his face, that working class writers need AI in order to create."; immediately followed by "“Who the fuck said that I’ll fucking break his skull open” said William Blake in a 2024 seance."

It’s always a mistake to engage with Bad Faith garbage like this, but I did enjoy these attempts:

You Don't Need AI To Write A Novel - Aftermath

NaNoWriMo Shits The Bed On Artificial Intelligence – Chuck Wendig: Terribleminds

There’s something extra hilarious about the grifters getting to NaNoWriMo—the whole point of writing 50,000 words in a month is not that the world needs more unreadable 50k manuscripts, but that it’s an excuse to practice, you gotta write 50k bad words before you can get to 50k good ones. Using AI here is literally bringing a robot to the gym to lift weights for you.

If you’re the kind of ghoul that wants to use a robot to write a book for you, that’s one (terrible) thing, but using it to “win” a for-fun contest that exists just to provide a community of support for people trying to practice? That’s beyond despicable.

The NaNoWriMo organization has been a mess for a long time; it’s a classic volunteer-run non-profit where the founders have moved on and the replacements have been… poor. It’s been a scandal engine for a decade now, and they’ve fired everyone and brought in new people at least once? And the fix is clearly in; NaNoWriMo got a new Executive Director this year, and the one thing the “AI” “Industry” has at the moment is gobs of money.

I wonder how small the bribe was. Someone got handed a check, excuse me, a “sponsorship”, and I wonder how embarrassingly, enragingly small the number was.

I mean, any amount would be deeply disgusting, but if it was, “all you have to do is sell out the basic principles of the non-profit you’re now in charge of and you can live in luxury for the rest of your life” that’s still terrible but at least I would understand. But you know, you know, however much money changed hands was pathetically small.

These are the kind of people who should be hounded out of any functional civilization.

And then I wake up to the news that Oprah is going to host a prime time special on The AI? Ahhhh, there we go, that’s starting to smell like a Matt Damon Superbowl Ad. From the guest list—Bill Gates?—it’s pretty clearly some high-profile reputation laundering, although I’m sure Oprah got a bigger paycheck than those suckers at NaNoWriMo. I see the discourse has already decayed through a cycle of “should we pre-judge this” (spoiler: yes) and then landed on whether or not there are still “cool” uses for AI. This is such a dishonest deflection that it almost takes my breath away. Whether or not it’s “cool” is literally the least relevant point. Asbestos was pretty cool too, you know?

And Another Thing… AI Postscript

I thought I was done talking about The AI for a while after last week’s “Why is this Happening” trilogy (Part I, Part II, Part III), but The AI wasn’t done with me just yet.

First, in one of those great coincidences, Ted Chiang has a new piece on AI in the New Yorker, Why A.I. Isn’t Going to Make Art (and yeah, that’s behind a paywall, but cough).

It’s nice to know Ted C. and I were having the same week last week! It’s the sort of piece where once you start quoting it’s hard to stop, so I’ll quote the bit everyone else has been:

The task that generative A.I. has been most successful at is lowering our expectations, both of the things we read and of ourselves when we write anything for others to read. It is a fundamentally dehumanizing technology because it treats us as less than what we are: creators and apprehenders of meaning. It reduces the amount of intention in the world.

Intention is something he locks onto here; creative work is about making lots of decisions as you do the work which can’t be replaced by a statistical average of past decisions by other people.

Second, continuing the weekend of coincidences, the kids and I went to an Anime convention this past weekend. We went to a panel on storyboarding in animation, which was fascinating, because storyboarding doesn’t quite mean the same thing in animation that it does in live-action movies.

At one point, the speaker was talking about a character in a show he had worked on named “Ai”, and specified he meant the name, not the two letters as an abbreviation, and almost reflexively spat out “I hate A. I.!” between literally gritted teeth.

Reader, the room—which was packed—roared in approval. It was the kind of noise you’d expect to lead to a pitchfork-wielding mob heading towards the castle above town.

Outside of the more galaxy-brained corners of the wreckage of what used to be called twitter or pockets of techbros, real people in the real world hate this stuff. I can’t think of another technology from my lifetime that has ever gotten a room full of people to do that. Nothing that isn’t armed can be successful against that sort of disgust; I think we’re going to be okay.

Why is this Happening, Part III: Investing in Shares of a Stairway to Heaven

We’ve talked a lot about “The AI” here at Icecano, mostly in terms ranging from “unflattering” to “extremely unflattering.” Which is why I’ve found myself stewing on this question the last few months: Why is this happening?

The easy answer is that, for starters, it’s a scam, a con. That goes hand-in-hand with it also being a hype-fueled bubble, which is finally starting to show signs of deflating. We’re not quite at the “Matt Damon in Superbowl ads” phase yet, but I think we’re closer than not to the bubble popping.

Fad-tech bubbles are nothing new in the tech world, in recent memory we had similar grifts around the metaverse, blockchain & “web3”, “quantum”, self-driving cars. (And a whole lot of those bubbles all had the same people behind them as the current one around AI. Lots of the same datacenters full of GPUs, too!) I’m also old enough to remember similar bubbles around things like bittorrent, “4gl languages”, two or three cycles on VR, 3D TV.

This one has been different, though. There’s a viciousness to the boosters, a barely contained glee at the idea that this will put people out of work, which has been matched in intensity by the pushback. To put all that another way, when ELIZA came out, no one from MIT openly delighted at the idea that they were about to put all the therapists out of work.

But what is it about this one, though? Why did this ignite in a way that those others didn’t?

A sentiment I see a lot, as a response to AI skepticism, is to say something like “no no, this is real, it’s happening.” And the correct response to that is to say that, well, asbestos pajamas really didn’t catch fire, either. Then what happened? Just because AI is “real” it doesn’t mean it’s “good”. Those mesothelioma ads aren’t because asbestos wasn’t real.

(Again, these tend to be the same people who a few years back had a straight face when they said they were “bullish on bitcoin.”)

But there’s another sentiment I see a lot that I think is standing behind that one: that this is the “last new tech we’ll see in our careers”. This tends to come from younger Xers & elder Millennials, folks who were just slightly too young to make it rich in the dot com boom, but old enough that they thought they were going to.

I think this one is interesting, because it illuminates part of how things have changed. From the late 70s through sometime in the 00s, new stuff showed up constantly, and more importantly, the new stuff was always better. There’s a joke from the 90s that goes like this: Two teams each developed a piece of software that didn’t run well enough on home computers. The first team spent months sweating blood, working around the clock to improve performance. The second team went and sat on a beach. Then, six months later, both teams bought new computers. And on those new machines, both systems ran great. So who did a better job? Who did a smarter job?

We all got absolutely hooked on the dopamine rush of new stuff, and it’s easy to see why; I mean, there were three extra verses of “We Didn’t Start the Fire” just in the 90s alone.

But a weird side effect is that as a culture of practitioners, we never really learned how to tell if the new thing was better than the old thing. This isn’t a new observation; Microsoft figured out how to weaponize this early on as Fire And Motion. And I think this has really driven the software industry’s tendency towards “fad-oriented development”; we never built up a herd immunity to shiny new things.

A big part of this, of course, is that the tech press profoundly failed. A completely un-skeptical, overly gullible press that was infatuated with shiny gadgets foisted a whole parade of con artists and scamtech on all of us, abdicating any duty they had to investigate accurately instead of just laundering press releases. The Professionally Surprised.

And for a long while, that was all okay, the occasional CueCat notwithstanding, because new stuff generally was better, and even if it was only marginally better, there was often a lot of money to be made by jumping in early. Maybe not “private island” money, but at least “retire early to the foothills” money.

But then somewhere between the Dot Com Crash and the Great Recession, things slowed down. Those two events didn’t help much, but also somewhere in there “computers” plateaued at “pretty good”. Mobile kept the party going for a while, but then that slowed down too.

My Mom tells a story about being a teenager while the Beatles were around, and how she grew up in a world where every nine months pop music was reinvented, like clockwork. Then the Beatles broke up, the 70s hit, and that all stopped. And she’s pretty open about how much she misses that whole era; the heady “anything can happen” rush. I know the feeling.

If your whole identity and worldview about computers as a profession is wrapped up in diving into a Big New Thing every couple of years, it’s strange to have it settle down a little. To maintain. To have to assess. And so it’s easy to find yourself grasping for what the Next Thing is, to try and get back that feeling of the whole world constantly reinventing itself.

But missing the heyday of the PC boom isn’t the reason that AI took off. But it provides a pretty good set of excuses to cover the real reasons.

Is there a difference between “The AI” and “Robots?” I think, broadly, the answer is “no;” but they’re different lenses on the same idea. There is an interesting difference between “robot” (we built it to sit outside in the back seat of the spaceship and fix engines while getting shot at) and “the AI” (write my email for me), but that’s more about evolving stories about which is the stuff that sucks than a deep philosophical difference.

There’s a “creative” vs “mechanical” difference too. If we could build an artificial person like C-3PO I’m not sure that having it wash dishes would be the best or most appropriate possible use, but I like that as an example because, rounding to the nearest significant digit, that’s an activity no one enjoys, and as an activity it’s not exactly a hotbed of innovative new techniques. It’s the sort of chore it would be great if you could just hand off to someone. I joke this is one of the main reasons to have kids, so you can trick them into doing chores for you.

However, once “robots” went all-digital and became “the AI”, they started having access to this creative space instead of the physical-mechanical one, and the whole field backed into a moral hazard I’m not sure they noticed ahead of time.

There’s a world of difference between “better clone stamp in photoshop” and “look, we automatically made an entire website full of fake recipes to farm ad clicks”; and it turns out there’s this weird grifter class that can’t tell the difference.

Gesturing back at a century of science fiction thought experiments about robots, being able to make creative art of any kind was nearly always treated as an indicator that the robot wasn’t just “a robot.” I’ll single out Asimov’s Bicentennial Man as an early representative example—the titular robot learns how to make art, and this both causes the manufacturer to redesign future robots to prevent this happening again, and sets him on a path towards trying to be a “real person.”

We make fun of the Torment Nexus a lot, but it keeps happening—techbros keep misunderstanding the point behind the fiction they grew up on.

Unless I’m hugely misinformed, there isn’t a mass of people clamoring to wash dishes, kids don’t grow up fantasizing about a future in vacuuming. Conversely, it’s not like there’s a shortage of people who want to make a living writing, making art, doing journalism, being creative. The market is flooded with people desperate to make a living doing the fun part. So why did people who would never do that work decide that was the stuff that sucked and needed to be automated away?

So, finally: why?

I think there are several causes, all tangled.

These causes are adjacent to but not the same as the root causes of the greater enshittification—excuse me, “Platform Decay”—of the web. Nor are we talking about the largely orthogonal reasons why Facebook is full of old people being fooled by obvious AI glop. We’re interested in why the people making these AI tools are making them. Why they decided that this was the stuff that sucked.

First, we have this weird cultural stew where creative jobs are “desired” but not “desirable”. There’s a lot of cultural cachet around being a “creator” or having a “creative” job, but not a lot of respect for the people actually doing them. So you get the thing where people oppose the writers’ strike because they “need” a steady supply of TV, but the people who make it don’t deserve a living wage.

Graeber has a whole bit adjacent to this in Bullshit Jobs. Quoting the originating essay:

It's even clearer in the US, where Republicans have had remarkable success mobilizing resentment against school teachers, or auto workers (and not, significantly, against the school administrators or auto industry managers who actually cause the problems) for their supposedly bloated wages and benefits. It's as if they are being told ‘but you get to teach children! Or make cars! You get to have real jobs! And on top of that you have the nerve to also expect middle-class pensions and health care?’

“I made this” has cultural power. “I wrote a book,” “I made a movie,” are the sort of things you can say at a party that get people to perk up; “oh really? Tell me more!”

Add to this thirty-plus years of pressure to restructure public education around “STEM”, because those are the “real” and “valuable” skills that lead to “good jobs”, as if the only point of education was as a job training program. A very narrow job training program, because again, we need those TV shows but don’t care to support new people learning how to make them.

There’s always a class of people who think they should be able to buy anything; any skill someone else has acquired is something they should be able to purchase. This feels like a place I could put several paragraphs that use the word “neoliberalism” and then quote from Ayn Rand, The Incredibles, or Led Zeppelin lyrics depending on the vibe I was going for, but instead I’m just going to say “you know, the kind of people who only bought the Cliffs Notes, never the real book,” and trust you know what I mean. The kind of people who never learned the difference between “productivity hacks” and “cheating”.

The sort of people who only interact with books as a source of isolated nuggets of information, the kind of people who look at a pile of books and say something like “I wish I had access to all that information,” instead of “I want to read those.”

People who think money should count at least as much, if not more than, social skills or talent.

On top of all that, we have the financialization of everything. Hobbies for their own sake are not acceptable, everything has to be a side hustle. How can I use this to make money? Why is this worth doing if I can’t do it well enough to sell it? Is there a bootcamp? A video tutorial? How fast can I start making money at this?

Finally, and critically, I think there’s a large mass of people working in software that don’t like their jobs and aren’t that great at them. I can’t speak for other industries first hand, but the tech world is full of folks who really don’t like their jobs, but they really like the money and being able to pretend they’re the masters of the universe.

All things considered, “making computers do things” is a pretty great gig. In the world of Professional Careers, software sits at the sweet spot of “amount you actually have to know & how much school you really need” vs “how much you get paid”.

I’ve said many times that I feel very fortunate that the thing I got super interested in when I was twelve happened to turn into a fully functional career when I hit my twenties. Not everyone gets that! And more importantly, there are a lot of people making those computers do things who didn’t get super interested in computers when they were twelve, because the thing they got super interested in doesn’t pay for a mortgage.

Look, if you need a good job, and maybe aren’t really interested in anything specific, or at least in anything that people will pay for, “computers”—or computer-adjacent—is a pretty sweet direction for your parents to point you. I’ve worked with more of these than I can count—developers, designers, architects, product people, project managers, middle managers—and most of them are perfectly fine people, doing a job they’re a little bored by, and then they go home and do something that they can actually self-actualize about. And I suspect this is true for a lot of “sit down inside email jobs,” that there’s a large mass of people who, in a just universe, their job would be “beach” or “guitar” or “games”, but instead they gotta help knock out front-end web code for a mid-list insurance company. Probably, most careers are like that, there’s the one accountant that loves it, and then a couple other guys counting down the hours until their band’s next unpaid gig.

But one of the things that makes computers stand out is that those accountants all had to get certified. The computer guys just needed a bootcamp and a couple weekends worth of video tutorials, and suddenly they get to put “Engineer” on their resume.

And let’s be honest: software should be creative, usually is marketed as such, but frequently isn’t. We like to talk about software development as if it’s nothing but innovation and “putting a dent in the universe”, but the real day-to-day is pulling another underwritten story off the backlog that claims to be easy but is going to take a whole week to write one more DTO, or web UI widget, or RESTful API that’s almost, but not quite, entirely unlike the last dozen of those. Another user-submitted bug caused by someone doing something stupid that the code that got written badly and shipped early couldn’t handle. Another change to government regulations that’s going to cause a remodel of the guts of this thing, which somehow manages to be a surprise despite the fact the law was passed before anyone in this meeting even started working here.

They don’t have time to learn how that regulation works, or why it changed, or how the data objects were supposed to happen, or what the right way to do that UI widget is—the story is only three points, get it out the door or our velocity will slip!—so they find something they can copy, slap something together, write a test that passes, ship it. Move on to the next. Peel another one off the backlog. Keep that going. Forever.

And that also leads to this weird thing software has where everyone is just kind of bluffing everyone all the time, or at least until they can go look something up on stack overflow. No one really understands anything, just gotta keep the feature factory humming.

The people who actually like this stuff, who got into it because they liked making computers do things for their own sake, keep finding ways to make it fun, or at least different. “Continuous Improvement,” we call it. Or, you know, they move on, leaving behind all those people whose twelve-year old selves would be horrified.

But then there’s the group that’s in the center of the Venn Diagram of everything above. All this mixes together, and in a certain kind of reduced-empathy individual, manifests as a fundamental disbelief in craft as a concept. Deep down, they really don’t believe expertise exists. That “expertise” and “bias” are synonyms. They look at people who are “good” at their jobs, who seem “satisfied” and are jealous of how well that person is executing the con.

Whatever they were into at twelve didn’t turn into a career, and they learned the wrong lesson from that. The kind of people who were in a band as a teenager and then spent the years since as a management consultant, and think the only problem with that is that they ever wanted to be in a band, instead of being mad that society has more open positions for management consultants than bass players.

They know which is the stuff that sucks: everything. None of this is the fun part; the fun part doesn’t even exist; that was a lie they believed as a kid. So they keep trying to build things where they don’t have to do their jobs anymore but still get paid gobs of money.

They dislike their jobs so much, they can’t believe anyone else likes theirs. They don’t believe expertise or skill is real, because they have none. They think everything is a con because that’s what they do. Anything you can’t just buy must be a trick of some kind.

(Yeah, the trick is called “practice”.)

These aren’t people who think that critically about their own field, which is another thing that happens when you value STEM over everything else, and forget to teach people ethics and critical thinking.

Really, all they want to be are “Idea Guys”, tossing off half-baked concepts and surrounded by people they don’t have to respect and who won’t talk back, who will figure out how to make a functional version of their ill-formed ramblings. That they can take credit for.

And this gets to the heart of what’s so evil about the current crop of AI.

These aren’t tools built by the people who do the work to automate the boring parts of their own work; these are built by people who don’t value creative work at all and want to be rid of it.

As a point of comparison, the iPod was clearly made by people who listened to a lot of music and wanted a better way to do so. Apple has always been unique in the tech space in that it works more like a consumer electronics company; the vast majority of its products are clearly made by people who would themselves be enthusiastic customers. In this field we talk about “eating your own dog-food” a lot, but if you’re writing a claims processing system for an insurance company, there’s only so far you can go. Making a better digital music player? That lets you think different.

But no: AI is all being built by people who don’t create, who resent having to create, who resent having to hire people who can create. Beyond even “I should be able to buy expertise” and into “I value this so little that I don’t even recognize this as a real skill”.

One of the first things these people tried to automate away was writing code—their own jobs. These people respect skill, expertise, craft so little that they don’t even respect their own. They dislike their jobs so much, and respect their own skills so little, that they can’t imagine that someone might not feel that way about their own.

A common pattern has been how surprised the techbros have been at the pushback. One of the funnier (in a laugh so you don’t cry way) sideshows is the way the techbros keep going “look, you don’t have to write anymore!” and every writer everywhere is all “ummmmm, I write because I like it, why would I want to stop” and then it just cuts back and forth between the two groups saying “what?” louder and angrier.

We’re really starting to pay for the fact that our civilization spent 20-plus years shoving kids that didn’t like programming into the career because it paid well and you could do it sitting down inside and didn’t have to be that great at it.

What future are they building for themselves? What future do they expect to live in, with this bold AI-powered utopia? Some vague middle-management “Idea Guy” economy, with the worst people in the world summoning books and art and movies out of thin air for no one to read or look at or watch, because everyone else is doing the same thing? A web full of AI slop made by and for robots trying to trick each other? Meanwhile the dishes are piling up? That’s the utopia?

I’m not sure they even know what they want, they just want to stop doing the stuff that sucks.

And I think that’s our way out of this.

What do we do?

For starters, AI Companies need to be regulated, preferably out of existence. There’s a flavor of libertarian-leaning engineer that likes to say things like “code is law,” but actually, turns out “law” is law. There’s whole swathes of this that we as a civilization should have no tolerance for; maybe not to a full Butlerian Jihad, but at least enough to send deepfakes back to the Abyss. We dealt with CFCs and asbestos, we can deal with this.

Education needs to be less STEM-focused. We need to carve out more career paths (not “jobs”, not “gigs”, “careers”) that have the benefits of tech but aren’t tech. And we need to furiously defend and expand spaces for creative work to flourish. And for that work to get paid.

But those are broad, society-wide changes. But what can those of us in the tech world actually do? How can we help solve these problems in our own little corners? What can we go into work tomorrow and actually do?

It’s on all of us in the tech world to make sure there’s less of the stuff that sucks.

We can’t do much about the lack of jobs for dance majors, but we can help make sure those people don’t stop believing in skill as a concept. Instead of assuming what we think sucks is what everyone thinks sucks, is there a way to make it not suck? Is there a way to find a person who doesn’t think it sucks? (And no, I don’t mean “Uber for writing my emails”) We gotta invite people in and make sure they see the fun part.

The actual practice of software has become deeply dehumanizing. None of what I just spent a week describing is the result of healthy people working in a field they enjoy, doing work they value. This is the challenge we have before us: how can we change course so that the tech industry doesn’t breed this? Those of us that got lucky at twelve need to find new ways to bring along the people who didn’t.

With that in mind, next Friday on Icecano we start a new series on growing better software.

Several people provided invaluable feedback on earlier iterations of this material; you all know who you are and thank you.

And as a final note, I’d like to personally apologize to the one person who I know for sure clicked Open in New Tab on every single link. Sorry man, they’re good tabs!

Why is this Happening, Part II: Letting Computers Do The Fun Part

Previously: Part I

Let’s leave the Stuff that Sucks aside for the moment, and ask a different question. Which Part is the Fun Part? What are we going to do with this time the robots have freed up for us?

It’s easy to get wrapped up in pointing at the parts of living that suck; especially when fantasizing about assigning work to C-3PO’s cousin. And it’s easy to spiral to a place where you just start waving your hands around at everything.

But even Bertie Wooster had things he enjoyed, that he occasionally got paid for, rather than let Jeeves work his jaw for him.

So it’s worth recalibrating for a moment: which are the fun parts?

As aggravating as it can be at times, I do actually like making computers do things. I like programming, I like designing software, I like building systems. I like finding clever solutions to problems. I got into this career on purpose. If it was fun all the time they wouldn’t have to call it “work”, but it’s fun a whole lot of the time.

I like writing (obviously.) For me, that dovetails pretty nicely with liking to design software; I’m generally the guy who ends up writing specs or design docs. It’s fun! I owned the customer-facing documentation several jobs back. It was fun!

I like to draw! I’m not great at it, but I’m also not trying to make a living out of it. I think having hobbies you enjoy but aren’t great at is a good thing. Not every skill needs to have a direct line to a career or a side hustle. Draw goofy robots to make your kids laugh! You don’t have to figure out a monetization strategy.

In my “outside of work” life I think I know more writers and artists than programmers. For all of them, the work itself (the writing, the drawing, the music, making the movie) is the fun part. The part they don’t like so well is the “figuring out how to get paid” part, or the dealing with printers part, or the weird contracts part. The hustle. Or, you know, the doing dishes, laundry, and vacuuming part. The “chores” part.

So every time I see a new “AI tool” release that writes text or generates images or makes video, I always ask the same question:

Why would I let the computer do the fun part?

The writing is the fun part! The drawing pictures is the fun part! Writing the computer programs is the fun part! Why, why, are they trying to tell us that those are the parts that suck?

Why are the techbros trying to automate away the work people want to do?

It’s fun, and I worked hard to get good at it! Now they want me to let a robot do it?

Generative AI only seems impressive if you’ve never successfully created anything. Part of what makes “AI art” so enragingly radicalizing is the sight of someone who’s never tried to create something before, never studied, never practiced, never put the time in, never really even thought about it, joylessly showing off the terrible AI slop they made and demanding to be treated as if they made it themselves, rather than having used a tool built on the fruits of a million million stolen works.

Inspiration and plagiarism are not the same thing, the same way that “building a statistical model of word order probability from stuff we downloaded from the web” is not the same as “learning”. A plagiarism machine is not an artist.

But no, the really enraging part is watching these people show off this garbage and realizing that they can’t tell the difference. And AI art seems to be getting worse; AI pictures are getting easier to spot, not harder, because of course they are, because the people making the systems don’t know what good is. And the culture is following: “it looks like AI made it” has become the exact opposite of a compliment. AI-generated glop is seen as tacky, low quality. And more importantly, seen as cheap, made by someone who wasn’t willing to spend any money on the real thing. Trying to pass off Krusty Burgers as their own cooking.

These are people with absolutely no taste, and I don’t mean people who don’t have a favorite Kurosawa film, I mean people who order a $50 steak well done and then drown it in A1 sauce. The kind of people who, deep down, don’t believe “good” is real. That it’s all just “marketing.”

The act of creation is inherently valuable; creation is an act that changes the creator as much as anyone. Writing things down isn’t just documentation, it’s a process that allows and enables the writer to discover what they think, explore how they actually feel.

“Having AI write that for you is like having a robot lift weights for you.”

AI writing is deeply dehumanizing, to both the person who prompted it and to the reader. There is so much weird stuff to unpack from someone saying, in what appears to be total sincerity, that they used AI to write a book. That the part they thought sucked was the fun part, the writing, and left their time free for… what? Marketing? Uploading metadata to Amazon? If you don’t want to write, why do you want people to call you a writer?

Why on earth would I want to read something the author couldn’t be bothered to write? Do these ghouls really just want the social credit for being “an artist”? Who are they trying to impress, what new parties do they think they’re going to get into because they have a self-published AI-written book with their name on it? Talk about participation trophies.

All the people I know in real life or follow on the feeds who use computers to do their thing but don’t consider themselves “computer people” have reacted with a strong and consistent full-body disgust. Personally, compared to all those past bubbles, this is the first tech I’ve ever encountered where my reaction was complete revulsion.

Meanwhile, many (not all) of the “computer people” in my orbit tend to be at least AI-curious, with lots of hedging like “it’s useful in some cases” or “it’s inevitable”, or else full-blown enthusiasm.

One side, “absolutely not”, the other side, “well, mayyybe?” As a point of reference, this was the exact breakdown of how these same people reacted to blockchain and bitcoin.

One group looks at the other and sees people musing about if the face-eating leopard has some good points. The other group looks at the first and sees a bunch of neo-luddites. Of course, the correct reaction to that is “you’re absolutely correct, but not for the reasons you think.”

There’s a Douglas Adams bit that gets quoted a lot lately, which was printed in Salmon of Doubt but I think was around before that:

I’ve come up with a set of rules that describe our reactions to technologies:

Anything that is in the world when you’re born is normal and ordinary and is just a natural part of the way the world works.

Anything that’s invented between when you’re fifteen and thirty-five is new and exciting and revolutionary and you can probably get a career in it.

Anything invented after you’re thirty-five is against the natural order of things.

The better-read AI-grifters keep pointing at rule 3. But I keep thinking of the bit from Dirk Gently’s Holistic Detective Agency about the Electric Monk:

The Electric Monk was a labour-saving device, like a dishwasher or a video recorder. Dishwashers washed tedious dishes for you, thus saving you the bother of washing them yourself, video recorders watched tedious television for you, thus saving you the bother of looking at it yourself; Electric Monks believed things for you, thus saving you what was becoming an increasingly onerous task, that of believing all the things the world expected you to believe.

So, what are the people who own the Monks doing, then?

Let’s speak plainly for a moment: the tech industry has always had a certain… ethical flexibility. The “things” in “move fast and break things” weren’t furniture or fancy vases; this isn’t just playing baseball inside the house. And this has been true for a long time; the Open Letter to Hobbyists was basically Gates complaining that other people’s theft was undermining the con he was running.

We all liked to pretend “disruption” was about finding “market inefficiencies” or whatever, but mostly what that meant was moving in to a market where the incumbents were regulated and labor had legal protection and finding a way to do business there while ignoring the rules. Only a psychopath thinks “having to pay employees” is an “inefficiency.”

Vast chunks of what it takes to make generative AI possible are already illegal or at least highly unethical. The Internet has always been governed by a sort of combination of gentleman’s agreements and pirate codes, and in the hunger for new training data, the AI companies have sucked up everything, copyright, licensing, and good neighborship be damned.

There are some half-hearted attempts to combat AI via arguments that it violates copyright or open source licensing, or via other legal approaches. And more power to them! Personally, I’m not really interested in the argument that AI training data violates contract law, because I care more about the fact that it’s deeply immoral. See that Vonnegut line about “those who devised means of getting paid enormously for committing crimes against which no laws had been passed.” It’s much like how I think people who drive too fast in front of schools should get a ticket, sure, but I’m not opposed to the speeding because it’s illegal; I’m opposed because it’s dangerous to the kids.

It’s been pointed out more than once that AI breaks the deal behind webcrawlers and search—search engines are allowed to suck up everyone’s content in exchange for sending traffic their way. But AI just takes and regurgitates, without sharing the traffic, or even the credit. It’s the AI Search Doomsday Cult. Even Uber didn’t try to put car manufacturers out of business.

But beyond all that, making things is fun! Making things for other people is fun! It’s about making a connection between people, not about formal correctness or commercial viability. And then you see those terrible Google fan letter ads at the Olympics, or see people crowing that they used AI to generate a kids book for their children, and you wonder, how can these people have so little regard for their audience that they don’t want to make the connection themselves? That they’d rather give their kids something a jumped-up spreadsheet full of stolen words barfed out instead of something they made themselves? Why pass on the fun part, just so you can take credit for something thoughtless and tacky? The AI ads want you to believe that you need their help to find “the right word”; what they don’t tell you is that no you don’t, what you need to do is have fun finding your word.

Robots turned out to be hard. Actually, properly hard. You can read these papers by computer researchers in the fifties where they’re pretty sure Threepio-style robot butlers are only 20 years away, which seems laughable now. Robots are the kind of hard where the more we learn the harder they seem.

As an example: Doctor Who in the early 80s added a robot character who was played by the prototype of an actual robot. This went about as poorly as you might imagine. That’s impossible to imagine now; no producer would risk their production on a homemade robot today, no matter how impressive the demo was. You want a thing that looks like Threepio walking around and talking with a voice like a Transformer? Put a guy in a suit. Actors are much easier to work with. Even though they have a union.

Similarly, “General AI” in the HAL/KITT/Threepio sense has been permanently 20 years in the future for at least 70 years now. The AI class I took in the 90s was essentially a survey of things that hadn’t worked, and ended with a kind of shrug and “maybe another 20?”

Humans are really, really good at seeing faces in things, and finding patterns that aren’t there. Any halfway decent professional programmer can whip up an ELIZA clone in an afternoon, and even knowing how the trick works it “feels” smarter than it is. A lot of AI research projects are like that, a sleight-of-hand trick that depends on doing a lot of math quickly and on the human capacity to anthropomorphize. And then the self-described brightest minds of our generation fail the mirror test over and over.

Actually building a thing that can “think”? Increasingly seems impossible.

You know what’s easy, though, comparatively speaking? Building a statistical model of all the text you can pull off the web.

On Friday: conclusions, such as they are.

Why is this Happening, Part I: The Stuff That Sucks

When I was a kid, I had this book called The Star Wars Book of Robots. It was a classic early-80s kids pop-science book; kids are into robots, so let’s have a book talking about what kinds of robots existed at the time, and then what kinds of robots might exist in the future. At the time, Star Wars was the spoonful of sugar to help education go down, so every page talked about a different kind of robot, and then the illustration was a painting of that kind of robot going about its day while C-3PO, R2-D2, and occasionally someone in 1970s leisurewear looked on. So you’d have one of those car factory robot arms putting a sedan together while the droids stood off to the side with a sort of “when is Uncle Larry finally going to retire?” energy.

The image from that book that has stuck with me for four decades is the one at the top of this page: Threepio, trying to do the dishes while vacuuming, and having the situation go full slapstick. (As a kid, I was really worried that the soap suds were going to get into his bare midriff there and cause electrical damage, which should be all you need to know to guess exactly what kind of kid I was at 6.)

Nearly all the examples in the book were of some kind of physical labor; delivering mail, welding cars together, doing the dishes, going to physically hostile places. And at the time, this was the standard pop-culture job for robots “in the future”, that robots and robotic automation were fundamentally physical, and were about relieving humans from mechanical labor.

The message is clear: in the not too distant future we’re all going to have some kind of robotic butler or maid or handyman around the house, and that robot is going to do all the Stuff That Sucks. Dishes, chores, laundry, assorted car assembly, whatever it is you don’t want to do, the robot will handle for you.

I’ve been thinking about this a lot over the last year and change since “Generative AI” became a phrase we were all forced to learn. And what’s interesting to me is the way that the sales pitch has evolved around which is the stuff that sucks.

Robots, as a storytelling construct, have always been a thematically rich metaphor in this regard, and provide an interesting social diagnostic. You can tell a lot about what a society thinks is “the stuff that sucks” by looking at both what the robots and the people around them are doing. The work that brought us the word “robot” itself represented them as artificially constructed laborers who revolted against their creators.

Asimov’s body of work, which was the first to treat robots as something industrial and coined the term “robotics”, mostly represented them as doing manual labor in places too dangerous for humans while the humans sat around doing science or supervision. But Asimov’s robots also were always shown to be smarter and more individualistic than the humans believed, and generally found a way to do what they wanted to do, regardless of the restrictions from the “Laws of Robotics.”

Even in Star Wars, which buries the political content low in the mix, it’s the droids where the dark satire from THX-1138 pokes through; robots are there as a permanent servant class doing dangerous work either on the outside of spaceships or translating for crime bosses, are the only group shown to be discriminated against, and get mind-wiped on the orders of otherwise unambiguous “good guys”, despite consistently being some of the smartest and most capable characters.

And then, you know, Blade Runner.

There’s a lot of social anxiety wrapped up in all this. Post-industrial revolution, the expanding middle classes wanted the same kinds of servants and “domestic staff” as the upper classes had. Wouldn’t it be nice to have a butler, a valet, some “staff?” That you didn’t have to worry about?

This is the era of Jeeves & Wooster, and who wouldn’t want a “gentleman’s gentleman” to do the work around the house, make you a hangover cure, drive the car, get you out of scrapes, all while you frittered your time away with idiot friends?

(Of course, I’m sure it’s a total coincidence this is also the period where the Marxists & Socialist thinkers really got going.)

But that stayed aspirational, rather than possible, and especially post-World War II, the culture landed on sending women back home and depending on the stay-at-home mom to handle “all that.”

There’s a lot of “robot butlers” in mid-century fiction, because how nice would it be if you could just go to the store and buy that robot from The Jetsons, free from any guilt? There’s a lot to unpack there, but that desire for a guilt-free servant class was, and is, persistent in fiction.

Somewhere along the line, this changes, and robots stop being manual labor or the domestic staff, and start being secretaries, executive assistants. For example, by the time Star Trek: The Next Generation rolls around in the late 80s, part of the fully automated luxury space communism of the Federation is that the Enterprise computer is basically the perfect secretary: making calls, taking dictation, and doing research. Even by then it was clear that there was a whole lot of “stuff to know”, and so robots find themselves acting as research assistants. Partly, this is a narrative accelerant (having the Shakespearian actor able to ask thin air for the next plot point helps move things along pretty fast), but the anxiety about information overload was there, even then. Imagine if you could just ask somebody to look it up for you! (Star Trek as a whole is an endless Torment Nexus factory, but that’s a whole other story.)

I’ve been reading a book about the history of keyboards, and one of the more interesting side stories is the way “typing” has interacted with gender roles over the last century. For most of the 1900s, “typing” was a woman’s job, and men, who were of course the bosses, didn’t have time for that sort of tediousness. They’re Idea Guys, and the stuff that sucks is wrestling with an actual typewriter to write them down.

So, they would either handwrite things they needed typed and send it down to the “typing pool”, or dictate to a secretary, who would type it up. Typing becomes a viable job out of the house for younger or unmarried women, albeit one without an actual career path.

This arrangement lasted well into the 80s, and up until then the only men who typed themselves were either writers or nerds. Then computers happened, PCs landed on men’s desks, and it turns out the only thing more powerful than sexism was the desire to cut costs, so men found themselves typing their own emails. (Although, this transition spawns the most unwittingly enlightening quote in the whole book, where someone who was an executive at the time of the transition says it didn’t really matter, because “Feminism ruined everything fun about having a secretary”. pikachu shocked face dot gif)

But we have a pattern; work that would have been done by servants gets handed off to women, and then back to men, and then fiction starts showing up fantasizing about giving that work to a robot, who won’t complain, or have an opinion—or start a union.

Meanwhile, in parallel with all this, “chat bots” have been cooking along for as long as there have been computers. Typing at a computer and getting a human-like response was an obvious interface, and spawned a whole line of thought adjacent to all those physical robots. ELIZA emerged almost as soon as computers were able to support such a thing. The Turing test assumes a chat interface. “Software Agents” become a viable area of research. The Infocom text adventure parser came out of the MIT AI lab. What if your secretary was just a page of text on your screen?

One branch of that thread evolved into LLMs and “Generative AI”. And thanks to the amount of VC being poured in, we get the last couple of years of AI slop. And also a hype cycle that says that any tech company that doesn’t go all-in on “the AI” is going to be left in the dust. It’s the Next Big Thing!

Flash forward to Apple’s Worldwide Developers Conference earlier this summer. The Discourse going into WWDC was that Apple was “behind on AI” and needed to catch up to the industry, although does it really count as behind if all your competitors are out over their skis? And so far AI has been extremely unprofitable, and if anything, Apple is a company that only ships products it knows it can make money on.

The result was that they rolled out the most comprehensive vision of how a Gen AI–powered product suite looks here in 2024. In many ways, “Apple Intelligence” was Apple doing what they do best—namely, doing their market research via letting their erstwhile competitors skid into a ditch, and then slide in with a full Second Mover Advantage by saying “so, now do you want something that works?”

They’re very, very good at identifying The Stuff That Sucks, and announcing that they have a solution. So what stuff was it? Writing text, sending pictures, communicating with other people. All done by a faceless, neutral, “assistant,” who you didn’t have to engage with like they were a person, just a fancy piece of software. Computer! Tea, Earl Grey! Hot!

I’m writing about a marketing event from months ago because watching their giant infomercial was where something clicked for me. They spent an hour talking about speed, “look how much faster you can do stuff!” “You don’t have to write your own text, draw your own pictures, send your own emails, interact directly with anyone!”

Left unsaid was what you were saving all that time for. Critically, they didn’t announce they were going to a 4-day work week or 6-hour days; all this AI was so people could do more “real work”. Except that the “stuff that sucks” was… that work? What’s the vision of what we’ll be doing when we’ve handed off all this stuff that sucks?

Who is building this stuff? What future do they expect to live in, with this bold AI-powered economy? What are we saving all this time for? What future do these people want? Why are these the things they have decided suck?

I was struck, not for the first time, by what a weird dystopia we find ourselves in: “we gutted public education and non-STEM subjects like writing and drawing, and everyone is so overworked they need a secretary but can’t afford one, so here’s a robot!”

To sharpen the point: why in the fuck am I here doing the dishes myself while a bunch of techbros raise billions of dollars to automate the art and poetry? What happened to Threepio up there? Why is this the AI that’s happening?

On Wednesday, we start kicking over rocks to find an answer...

And Another Thing: Pianos

I thought I had said everything I had to say about that Crush ad, but… I keep thinking about the Piano.

One of the items crushed by the hydraulic press into the new iPad was an upright piano. A pretty nice looking one! There was some speculation at first about how much of that ad was “real” vs CG, but since the apology didn’t include Apple reassuring everyone that it wasn’t a real piano, I have to assume they really did sacrifice a perfectly good upright piano for a commercial. Which is sad, and stupid expensive, but not the point.

I grew up in a house with, and I swear I am not making this up, two pianos. One was an upright not unlike the one in the ad; that piano has since found a new home, and lives at my uncle’s house now. The other piano is a gorgeous baby grand. It’s been the centerpiece of my parents’ living room for forty-plus years now. It was the piano in my mom’s house when she was a little girl, and I think it belonged to her grandparents before that. If I’m doing my math right, it’s pushing 80 or so years old. It hasn’t been tuned since the mid-90s, but it still sounds great. The pedals are getting a little soft, there’s some “battle damage” here and there, but it’s still incredible. It’s getting old, but barring any Looney Tunes–style accidents, it’ll still be helping toddlers learn Chopsticks in another 80 years.

My point is: This piano is beloved. My cousins would come over just so they could play it. We’ve got pictures of basically every family member for four generations sitting at, on, or around it. Everyone has played it. It’s currently covered in framed pictures of the family, in some cases with pictures of little kids next to pictures of their parents at the same age. When estate planning comes up, as it does from time to time, this piano gets as much discussion as just about everything else combined. I am, by several orders of magnitude, the least musically adept member of my entire extended family, and even I love this thing. It’s not a family heirloom so much as a family member.

And, are some ad execs in Cupertino really suggesting I replace all that with… an iPad?

I made the point about how fast Apple obsoletes things last time, so you know what? Let’s spot them that, and while we’re at it, let’s spot them how long we know that battery will keep working. Hell, let’s even spot them the “playing notes that sound like a piano” part of being a piano, just to be generous.

Are they seriously suggesting that I can set my 2-year-old down on top of the iPad to take the camera from my dad to take a picture while my mom shows my 4-year-old how to play chords? That we’re all going to stand in front of the iPad to get a group shot at Thanksgiving? That the framed photos of the wedding are going to sit on top of the iPad? That the iPad is going to be something there will be tense negotiations about who inherits?

No, of course not.

What made that ad so infuriating was that they weren’t suggesting any such thing, because it never occurred to them. They just thought they were making a cute ad, but instead they (accidentally?) perfectly captured the zeitgeist.

One of the many reasons why people are fed up with “big tech” is that as “software eats the world” and tries to replace everything, it doesn’t actually replace everything. It just replaces the top line thing, the thing in the middle, the thing that’s easy. And then abandons everything else that surrounds it. And it’s that other stuff, the people crowded around the piano, the photos, that really actually matters. You know, culture. Which is how you end up with this “stripping the copper out of the walls” quality the world has right now; it’s a world being rebuilt by people whose lives are so empty they think the only thing a piano does is play notes.

Crushed

What’s it look like when a company just runs out of good will?

I am, of course, talking about that ad Apple made and then apologized for where the hydraulic press smashes things down and reveals—the new iPad!

The Crush ad feels like a kind of inflection point. Because a few years ago, this would have gone over fine. Maybe a few grumps would have grouched about it, but you can imagine most people would have taken it in good humor, there would have been a lot of tweets on the theme of “look, what they meant was…”

Ahhh, that’s not how this one went? It’s easy to understand why some folks felt so angry; my initial response was more along the lines of “yeaaah, read the room.”

As more than one person pointed out, Apple’s far from the first company to use this metaphor to talk about a new smaller product; Nintendo back in the 90s, Nokia in ’08. And, look, first of all, “Nokia did it” isn’t the quality of defense you think it is, and second, I don’t know guys, maybe some stuff has happened over the last fifteen years to change the relationship artists have with big tech companies?

Apple has built up a lot of good will over the last couple of decades, mostly by making nice stuff that worked for regular people, without being obviously an ad or a scam, some kind of corporate nightmare, or a set of unserious tinkertoys that still doesn’t play sound right.

They’ve been withdrawing from that account quite a lot the last decade: weird changes, the entire app store “situation”, the focus on subscriptions and “services”. Squandering 20 years of built-up good will on “not fixing the keyboards.” And you couple that with the state of the whole tech industry: everyone knows Google doesn’t work as well as it used to, email is all spam, you can’t answer the phone anymore because a robot is going to try and rip you off, everyone gets a pile of scam text messages, Amazon is full of bootleg junk, Etsy isn’t hand-made anymore, social media is all bots and fascists, most things that made tech fun or exciting a decade or more ago have rotted out. And then, as every other tech company falls over themselves to gut the entirety of the arts and humanities to feed them into their Plagiarism Machines so techbros don’t have to pay artists, Apple, the “intersection of technology and liberal arts”, goes and does this? Et tu?

I picture last week as the moment Apple looked down and realized, Wile E. Coyote style, that they were standing out in mid-air having walked off the edge of their accumulated good will.

On the one hand, no, that’s not what they meant, it was misinterpreted. But on the other hand: yes, maybe it really was what they meant, and the people making it just hadn’t realized the degree to which they were saying the quiet part out loud.

Because a company smashing beautiful tools that have worked for decades to reveal a device that’ll stop being eligible for software updates in a few years is the perfect metaphor for the current moment.

Last Week In Good Sentences

It’s been a little while since I did an open tab balance transfer, so I’d like to tell you about some good sentences I read last week.

Up first, old-school blogger Jason Kottke links to a podcast conversation between Ezra Klein and Nilay Patel in The Art of Work in the Age of AI Production. Kottke quotes a couple of lines that I’m going to re-quote here because I like them so much:

EZRA KLEIN: You said something on your show that I thought was one of the wisest, single things I’ve heard on the whole last decade and a half of media, which is that places were building traffic thinking they were building an audience. And the traffic, at least in that era, was easy, but an audience is really hard. Talk a bit about that.

NILAY PATEL: Yeah first of all, I need to give credit to Casey Newton for that line. That is something — at The Verge, we used to say that to ourselves all the time just to keep ourselves from the temptations of getting cheap traffic. I think most media companies built relationships with the platforms, not with the people that were consuming their content.

“Building traffic instead of an audience” sums up the last decade and change of the web perfectly. I don’t even have anything to add there, just a little wave and “there you go.”

Kottke ends by linking out to The Revenge of the Home Page in The New Yorker, talking about the web starting to climb back towards a pre-social media form. And that’s a thought that’s clearly in the air these days, because other old school blogger Andy Baio linked to We can have a different web.

I have this theory that we’re slowly reckoning with the amount of cognitive space that was absorbed by twitter. Not “social media”, but twitter, specifically. As someone who still mostly consumes the web via his RSS reader, and has been the whole time, I’ve had to spend a lot of time re-working my feeds the last several months because I didn’t realize how many feeds had rotted away; I hadn’t noticed because those sites were posting updates as tweets.

Twitter absorbed so much oxygen, and there was so much stuff that migrated from “other places” onto twitter in a way that didn’t happen with other social media systems. And now that twitter is mostly gone, all that creativity and energy is out there looking for new places to land.

If you’ll allow me a strained metaphor, last summer felt like last call before the party at twitter fully shut down; this summer really feels like that next morning, where we’ve all shaken off the hangover and now everyone is looking at each other over breakfast asking “okay, what do you want to go do now?”

Jumping back up the stack to Patel talking about AI for a moment, a couple of extra sentences:

But these models in their most reductive essence are just statistical representations of the past. They are not great at new ideas. […] The human creativity is reduced to a prompt, and I think that’s the message of A.I. that I worry about the most, is when you take your creativity and you say, this is actually easy. It’s actually easy to get to this thing that’s a pastiche of the thing that was hard, you just let the computer run its way through whatever statistical path to get there. Then I think more people will fail to recognize the hard thing for being hard.

(The whole interview is great, you should go read it.)

But that bit about ideas and reducing creativity to a prompt brings me to my last good sentences, in this depressing-but-enlightening article over on 404 media: Flood of AI-Generated Submissions ‘Final Straw’ for Small 22-Year-Old Publisher

A small publisher for speculative fiction and roleplaying games is shuttering after 22 years, and the “final straw,” its founder said, is an influx of AI-generated submissions. […] “The problem with AI is the people who use AI. They don't respect the written word,” [founder Julie Ann] Dawson told me. “These are people who think their ‘ideas’ are more important than the actual craft of writing, so they churn out all these ‘ideas’ and enter their idea prompts and think the output is a story. But they never bothered to learn the craft of writing. Most of them don't even read recreationally. They are more enamored with the idea of being a writer than the process of being a writer. They think in terms of quantity and not quality.”

And this really gets to one of the things that bothers me so much about The Plagiarism Machine—the sheer, raw entitlement. Why shouldn’t they get to just have an easy copy of something someone else worked hard on? Why can’t they just have the respect of being an artist, while bypassing the work it takes to earn it?

My usual metaphor for AI is that it’s asbestos, but it’s also the art equivalent of steroids in pro sports. Sure, you hit all those home runs or won all those races, but we don’t care, we choose to live in a civilization where those don’t count, where those are cheating.

I know several people who have become enamored with the Plagiarism Machines over the last year—as I imagine all of us do now—and I’m always struck by a couple of things whenever they accidentally show me their latest works:

First, they’re always crap, just absolute dogshit garbage. And I think to myself, how did you make it to adulthood without being able to tell what’s good or not? There’s a basic artistic media literacy that’s just missing.

Second, how did we get to the point where you’ve got the nerve to be proud that you were cheating?

Monday Snarkblog

I spent the weekend at home with a back injury letting articles about AI irritate me, and I’m slowly realizing how useful Satan is as a societal construct. (Hang on, this isn’t just the painkillers talking). Because, my goodness, I’m already sick of talking about why AI is bad, and we’re barely at the start of this thing. I cannot tell you how badly I want to just point at ChatGPT and say “look, Satan made that. It's evil! Don't touch it!”

Here’s some more open tabs that are irritating me, and I’ve given myself a maximum budget of “three tweets” each to snark on them:

Wherein Cory does a great job laying out the problems with Common Core and how we got here, and then blows a fuse and goes Full Galaxy Brain, freestyling a solution where computers spit out new tests via some kind of standards-based electronic mad libs. Ha ha, fuck you man, did you hear what you just said? That’s the exact opposite of a solution, and I’m only pointing it out because this is the exact crap he’s usually railing against. Computers don’t need to be all “hammer lfg new nails” about every problem. Turn the robots off and let the experts do their jobs.

I abandoned OpenLiteSpeed and went back to good ol’ Nginx | Ars Technica

So wait, this guy had a fully working stack, and then was all “lol yolo” and replaced everything with no metrics or testing—twice??

I don’t know what the opposite of tech debt is called, but this is it. There’s a difference between “continuous improvement” and “the Winchester Mystery House” and boy oh boy are we on the wrong side of the looking glass.

The part of this one that got me wasn’t where he sat on his laptop in the hotel on his 21st wedding anniversary trip fixing things, it was the thing where he had already decided to bring his laptop on the trip before anything broke.

Things can just be done, guys. Quit tinkering to tinker and spend time with your family away from screens. Professionalism means making the exact opposite choices as this guy.

AI Pins And Society’s Immune Responses

Apparently “AI Pins” are a thing now? Before I could come up with anything new and rude to say after the last one, the Aftermath beat me to it: Why Would I Buy This Useless, Evil Thing?

I resent the imposition, the idea that since LLMs exist, it follows that they should exist in every facet in my life. And that’s why, on principle, I really hate the rabbit r1.

It’s as if the cultural immune response to AI is finally kicking in. To belabor the metaphor, maybe the social benefit of blockchain is going to turn out to have been to act as a societal inoculation against this kind of tech bro trash fire.

The increasing blowback makes me hopeful, as I keep saying.

Speaking of, I need to share with you this truly excellent quote lifted from jwz: The Bullshit Fountain:

I confess myself a bit baffled by people who act like "how to interact with ChatGPT" is a useful classroom skill. It's not a word processor or a spreadsheet; it doesn't have documented, well-defined, reproducible behaviors. No, it's not remotely analogous to a calculator. Calculators are built to be *right*, not to sound convincing. It's a bullshit fountain. Stop acting like you're a waterbender making emotive shapes by expressing your will in the medium of liquid bullshit. The lesson one needs about a bullshit fountain is *not to swim in it*.

What Might Be A Faint Glimmer Of Hope In This Whole AI Thing

As the Aftermath says, It's Been A Huge Week For Dipshit Companies That Either Hate Artists Or Are Just Incredibly Stupid.

Let’s look at the new Hasbro scandal for a second. To briefly recap, they rolled out some advertising for the next Magic: The Gathering expansion that was blatantly, blatantly, AI generated. Which is bad enough on its own, but that’s incredibly insulting for a game as artist-forward as MTG. But then, let’s add some context. This is after a year where they 1) blew the whole OGL thing, 2) literally sent The Actual Pinkertons after someone, 3) had a whole different AI art scandal for a D&D book that caused them to have to change their internal rules, 4) had to issue an apology for that stuff in Spelljammer, and 5) had a giant round of layoffs that, oh by the way what a weird coincidence, gutted the internal art department at Wizards. Not a company whose customers are going to default to good-faith readings of things!

And then, they lied about it! Tried to claim it wasn’t AI, and then had to embarrassingly walk it all back.

Here’s the sliver of hope I see in this.

First, the blowback was surprisingly large. There’s a real “we’re tired of this crap” energy coming from the community that wasn’t there a year ago.

More importantly, though, Hasbro knew what the right answer was. There wasn’t any attempt to defend or justify how “AI art is real art we’re just using new tools”; this was purely the behavior of a company that was trying to get away with something. They knew the community was going to react badly. It’s bad that they still went ahead, but a year ago they wouldn’t have even tried to hide it.

But most importantly (to me), in all the chatter I saw over the last few days, no one was claiming that “AI” “art” was as good as real art. A year ago, it would have been all apologists claiming that the machine generated glurge was “just as good” and “it’s still real art”, and “it’s just as hard to make this, just different tools”, “this is the future”, and so on.

Now, everyone seems to have conceded the point that the machine generated stuff is inherently low quality. The defenses I saw all centered around the fact that it was cheap and fast. “It’s too cheap not to use, what can you do?” seemed to be the default view from the defenders. That’s a huge shift from this time last year. Like how bitcoin fans have mostly stopped pretending crypto is real money, generative AI fans seem to be giving up on convincing us that it’s real art. And the bubble inches closer to popping.

End Of Year Open Tab Bankruptcy Roundup Jamboree, Part 2: AI & Other Tech

I’m declaring bankruptcy on my open tabs; these are all things I’ve had open on my laptop or phone over the last several months, thinking “I should send this to someone? Or is this a blog post?” Turns out the answer is ‘no’ to both of those, so here they are. Day 2: AI and Other Various Tech Topics

A Coder Considers the Waning Days of the Craft | The New Yorker

At one point, I had a draft of Fully Automated Insults to Life Itself with a whole bunch of empty space about 2/3 down with “coding craft guy?” written in the middle. I didn’t end up using it because, frankly, I didn’t have anything nice to say, and, whatever. Then I had two different family members ask me about this over the holidays in a concerned tone of voice, so okay, let’s do this.

This guy. This freakin’ guy. Let’s set this up. We have a New Yorker article where a programmer talks about how he used to think programming was super-important, but now with the emergence of “the AIs”, maybe his craft is coming to an end. It’s got all the things that usually bother me about AI articles: bouncing back and forth between “look at this neat toy!” and “this is utterly inevitable and will replace all of us”, a preemptively elegiac tone, a total failure to engage with any of the social, moral, or political issues around “AI”, including the fact that these “inevitable changes” are the direct result of decisions being made on purpose by real people with an ideology and an agenda. All that goes unacknowledged! That’s what should bother me.

But no, what actually bothered me was that I spent the whole time reading this thinking “I’d bounce this guy in an interview so fast.” Because he’s incredibly bad at his chosen profession. His examples of what he used GPT for are insane. Let’s go to the tape!

At one point, we wanted a command that would print a hundred random lines from a dictionary file. I thought about the problem for a few minutes, and, when thinking failed, tried Googling. I made some false starts using what I could gather, and while I did my thing—programming—Ben told GPT-4 what he wanted and got code that ran perfectly.

Fine: commands like those are notoriously fussy, and everybody looks them up anyway. It’s not real programming.

Wait, what? What? WHAT! He’s right, that’s not real programming, but a real programmer can knock that out faster than they can write. No one who writes code for a living should have to think about this for any length of time. This is like a carpenter saying that putting nails in straight isn’t real carpentry. “Tried Googling?” Tried? But then he follows up with:

A few days later, Ben talked about how it would be nice to have an iPhone app to rate words from the dictionary. But he had no idea what a pain it is to make an iPhone app. I’d tried a few times and never got beyond something that half worked. I found Apple’s programming environment forbidding. You had to learn not just a new language but a new program for editing and running code; you had to learn a zoo of “U.I. components” and all the complicated ways of stitching them together; and, finally, you had to figure out how to package the app. The mountain of new things to learn never seemed worth it.

There are just under 2 million iOS apps, all of them written by someone, usually many someones, who could “figure it out”. But this guy looked into it “a few times”, and the fact that it was too hard for him was somehow… not his fault? No self-reflection, there? “You had to learn not just a new language but a new program…”? Any reasonably senior programmer is fluent in at least half of the TIOBE top 20, and uses half a dozen IDEs or tools at once.

But that last line in the quote there. That last line is what haunts me. “The mountain of new things to learn never seemed worth it.” Every team I’ve ever worked on has had one of these guys—and they are always men—half-ass, self-taught dabblers, bush league, un-professional. Guys who steadfastly refuse to learn anything new after they made it into the field. Later, after all the talk about school, it turns out his degree is in economics; he couldn’t even be bothered not to half-ass it while he was literally paying people to teach him this stuff. The sheer nerve of someone who couldn’t even be bothered to learn what he needed to know to get a degree to speak for the rest of us.

I’ve been baffled by the emergence of GPT-powered coding assistants—why would someone want a tool that hallucinates possible-but-untested solutions? That are usually wrong? And that by definition you don’t know how to check? And I finally understand, it turns out it’s the economists that decided to go be shitty programmers instead. Who uses GPT? People who’ve been looking for shortcuts their whole life, and found a new one. Got it.

And look, I know—I know—this is bothering me way more than it should. But this attitude—learning new things is too hard, why should I care about the basics—is endemic in this industry. And learning new things is the job. That’s why we got into this in the first place. Instead, we’re getting dragged into an industry-wide moral hazard because Xcode’s big ass Play Button is too confusing?

I’ll tell you what this sharpened up, though. I have a new lead-off question for technical interviews: “Tell me the last thing you learned.”

looonnng exhale

Look! More links!

Defective Accelerationism; a concise and very funny summary of what a loser Sam Altman is; I cannot for the life of me remember where I saw the link to this?

Tech Billionaires Need to Stop Trying to Make the Science Fiction They Grew Up on Real | Scientific American; over in SciAm, Charlie Stross writes a cleaned up version of his talk I linked to back in You call it the “AI Nexus”, we call it the “Torment Pin”.

Ted Chiang: Fears of Technology Are Fears of Capitalism

Finally, we have an amusing dustup over in the open source world: Michael Tsai - Blog - GitHub Code Search Now Requires Logging In.

The change GitHub made is fine, but the open source dorks are acting like GitHub declared war on civilization itself. Click through to the GitHub issue if you want to watch the most self-important un-self-aware dinguses ruin their own position, by basically freaking out that someone giving them something for free might have conditions. WHICH IS PRETTY RICH coming from the folks that INVENTED “free with conditions.” I accidentally spent half an hour reading it muttering “this is why we always lose” under my breath.

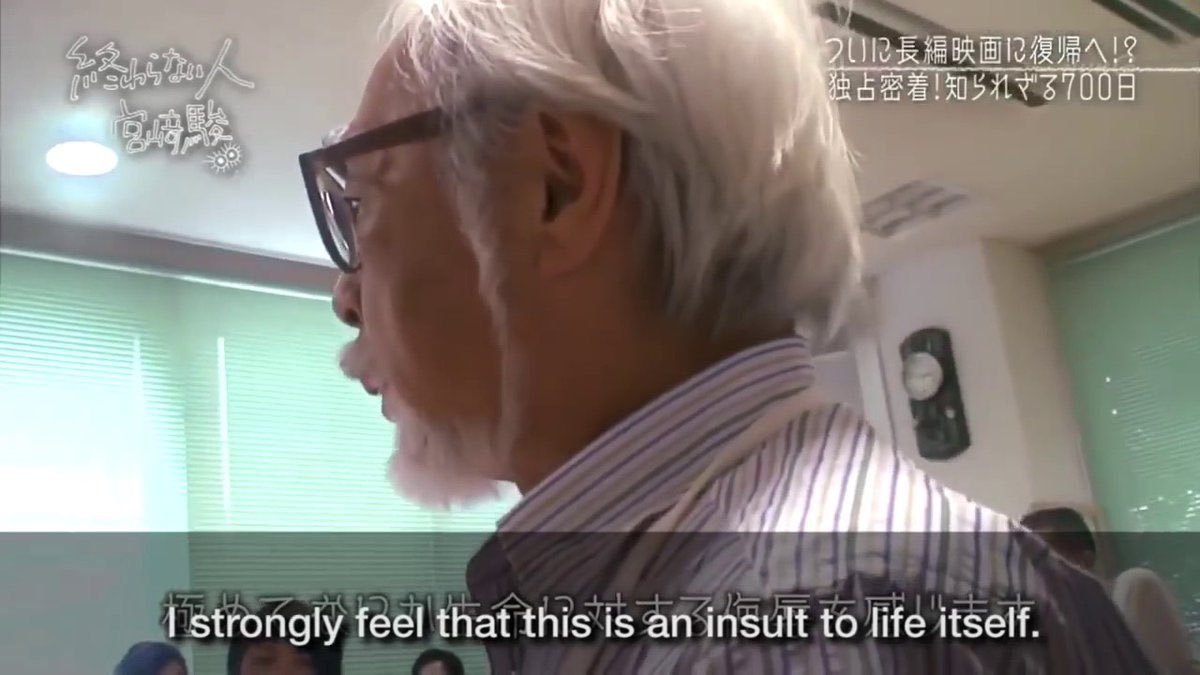

Fully Automated Insults to Life Itself